When a body of academic literature points to an effective new approach to generate meaningful learning gains for pupils, practitioners take notice. But this may not be the complete story. Timothy Sullivan, Director of Learning Innovation at education provider NewGlobe, shares insights from recent randomised evaluations of SMS outreach to parents and discusses how surprising null results may hint at a broader issue of publication bias.

Can an SMS really generate learning gains?

In recent years, a compelling case has emerged for the use of SMS to provide parents with information about pupil performance or returns to education. But could publication bias (which arises when studies with significant results are published more often than those with null results) be affecting our collective understanding of this promising intervention?

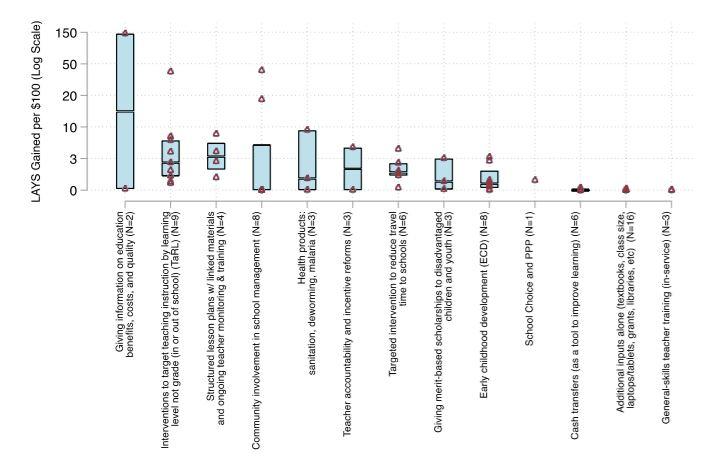

Excellent research in Botswana and Chile has highlighted the positive impact of SMS on learning outcomes and attendance. At first glance, SMS appears to be a sure thing! Simply share information or practice opportunities via SMS, and tap into a cheap approach to generating learning gains. Angrist et al. (2020) highlight the extraordinary cost-effectiveness, measured in learning-adjusted years of schooling (LAYS), of providing information to parents relative to other interventions.

Angrist et al. 2020.

But is SMS right for us?

In light of this overwhelming evidence in favour of SMS outreach, NewGlobe’s Learning Collaborative set out to evaluate this strategy in Bridge Kenya. NewGlobe supports visionary governments to improve public education systems through a comprehensive system transformation platform and data-driven educational services. Specifically, NewGlobe supports (1) government education transformation programmes, (2) community school programmes such as Bridge Kenya, and (3) the Learning Collaborative, a research hub focused on applied work in schools that will drive learning for children at a state and nationwide scale.

In 2018-2019, we ran a randomised evaluation of an SMS programme among class 3 and class 6 pupils at 225 Bridge Kenya schools. Messages shared personalised information on academic performance (midterm and endterm scores) as well as practice problems. After three terms, we were able to rule out even small positive effects of SMS messages on pupil learning, and found some evidence of negative effects.

As a follow-on study, we ran a randomised evaluation of SMS messages to support pupils preparing for the Kenya Certificate of Primary Education (KCPE), the primary leaving exam in Kenya. The SMS programme was similar, in that it provided information (in this case, predicted KCPE scores modelled using past performance data) and practice problems (taken from mock-KCPE exams). Again, after 1.5 terms, it appears that there was no effect on achievement (although the analysis is still ongoing).

Perhaps the root of the problem was the measurement instrument – are summative assessments like midterms and endterms insufficiently responsive to detect an impact on behavioural change? To test this, we collaborated with Anja Sautmann (now at the World Bank) and Sarah Kopper at J-PAL to explore whether SMS messaging could be used to encourage a specific behaviour in Bridge Nigeria—in this case, the completion of mobile WhatsApp quizzes on a platform designed by NewGlobe during Covid-19 school closures. We used an adaptive grouping approach in order to evaluate the efficacy of different message structures on quiz-taking behaviour. But once again, we found no effect of SMS messaging on the number of quizzes taken at home.

It’s not you, it’s me?

What are we to make of these results, which fail to confirm a very consistent narrative in the literature? Have we encountered a limitation of SMS outreach? Or just a lack of relevance in a Bridge Kenya context? There are numerous possible explanations for these repeated null results, among which are:

- Bridge Kenya parents are desensitised to SMS messages—they receive a large number of messages from the school, telecom providers, and other bulk SMS services. The messages just don’t “stick.”

- There is an impact on learning, but the instrument being used (midterm or endterm exams; quizzes taken) is insufficiently sensitive to detect these changes.

- We are using an outdated mode of communication (SMS), when parents are shifting towards new forms of interaction (WhatsApp, etc.).

- The substance of the messages is somehow different from those successfully used in other cases (focusing on achievement rather than attendance, for example).

- The setting in which we are messaging (country; school type; grade level; population of parents and pupils) is fundamentally different from contexts in which positive effects of SMS were discovered.

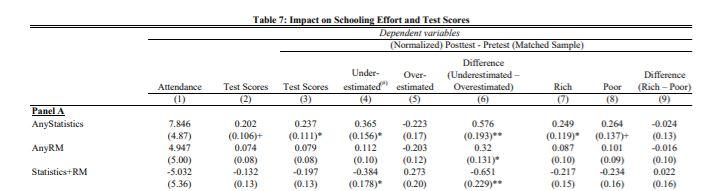

But these explanations likely tell only part of the story. It is useful here to turn to other literature on non-SMS parent engagement. Nguyen (2008) studied the effects of providing either (1) statistics on returns to education (in terms of future employment and earning potential) or (2) a role model demonstrating a success story, each delivered during a 20-minute parent meeting. Providing statistics resulted in more accurate parent perceptions of returns to education and improved test scores by 0.2 standard deviations (and by 0.37 standard deviations for children of parents who had underestimated the returns to education).

On the surface, these results strengthen the hypothesis that providing information (either via SMS or in person) generates low-cost returns. But on closer examination, we find that for parents who overestimated returns to education, the treatment had a negative effect on their children’s learning (a decline of 0.22 standard deviations), although this effect was not statistically significant and should be treated with some caution.

Nguyen 2008.

Because Bridge Kenya schools are private schools where parents pay tuition, these parents likely have different perceptions of returns to education than parents who enrol their children in government schools. They may also track their child’s academic performance more closely or meet with the teacher more frequently.

Are we telling the complete story?

Clearly, information provision via SMS or through other channels is complex and nuanced: such interventions are not always effective, and even when they are, they affect different groups and outcomes in different ways. Information provision undoubtedly deserves to be part of the conversation around low-cost interventions to promote learning. But it is not a panacea, and is not effective in all contexts. Nguyen’s (2008) findings suggest that for parents who overestimate returns to education, information provision might actually be harmful!

There is a dissonance between our findings at NewGlobe and the narrative in the literature. As both a researcher exploring these questions and a practitioner grappling with the extent to which we should be implementing these interventions, I find myself wondering whether we are receiving the complete story. Are there other unpublished studies where SMS produced null or negative results?

At NewGlobe, we have run 18 randomised evaluations within our own context during the past 2 years (on parent engagement, but also on instructional design and teacher support). During this time, we’ve learned (among other things) that the incumbent is extraordinarily difficult to beat! Some new ideas (interleaving, for example) ‘work’ in our context. But for many of the evaluations that we’ve conducted, we’ve found no effect or small negative effects relative to the status quo.

As a practitioner, there is no denying that null effects can be disheartening. We invest considerable energy in learning about problems confronting our pupils, researching possible solutions in the literature, piloting those solutions extensively in our own context, and running a randomised evaluation of the programme over the course of an academic year. On further reflection, however, a null effect can also be encouraging. It means that our current approach is as effective as a more novel intervention. In addition, null effects ensure that we do not proceed blindly in implementing an ineffective (and potentially costly) approach.

We also believe that null effects have value beyond our own organisation’s decision-making processes. Others can learn as much from our studies that fail to find an effect as from those that do find a positive effect. We are working closely with external academic teams to publish our findings (both positive outcomes, but also null and negative results) to ensure that other practitioners can benefit from our experience and from the lessons that we have learned.

How can we address publication bias?

Of course, it is possible that we are unique in encountering so many null results—however, I suspect not! Rather, I suspect that there is a publication bias towards positive findings. Polanin, Tanner-Smith, and Hennessy (2016) carried out a meta-review of 6,392 studies between 1986 and 2013, and found that published studies were significantly more likely to show positive results than unpublished studies. Chow and Ekholm (2018) found similar results, with published studies reporting positive results more frequently and of a higher magnitude compared with unpublished studies.
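The mechanism behind this pattern can be illustrated with a small simulation. The sketch below is purely hypothetical: it does not use data from any of the studies cited here, and the sample sizes, the number of studies, and the rule that only significant positive results are "published" are all assumptions chosen for illustration. It simulates many two-arm trials of an intervention whose true effect is zero, then compares the average estimate across all trials with the average among the "published" subset.

```python
import math
import random
import statistics


def simulate_study(true_effect, n_per_arm, rng):
    """Simulate one two-arm trial; return (estimated effect, is_significant)."""
    # Outcomes are in standard deviation units (hypothetical test scores).
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_arm)]
    estimate = statistics.fmean(treated) - statistics.fmean(control)
    std_error = math.sqrt(
        statistics.variance(treated) / n_per_arm
        + statistics.variance(control) / n_per_arm
    )
    # Two-sided test at roughly the 5% level (normal approximation).
    return estimate, abs(estimate / std_error) > 1.96


def simulate_literature(true_effect=0.0, n_studies=2000, n_per_arm=100, seed=0):
    """Compare the mean estimate across all studies with the 'published' ones."""
    rng = random.Random(seed)
    studies = [simulate_study(true_effect, n_per_arm, rng) for _ in range(n_studies)]
    all_estimates = [est for est, _ in studies]
    # Assumed selection rule: only significant positive results are published.
    published = [est for est, sig in studies if sig and est > 0]
    return {
        "mean_all": statistics.fmean(all_estimates),
        "mean_published": statistics.fmean(published),
        "share_published": len(published) / n_studies,
    }


summary = simulate_literature()
print(summary)
```

Under these assumptions, the average across all simulated trials sits close to the true effect of zero, while the small "published" subset shows a clearly positive average. A reader who sees only that subset would conclude the intervention works, even though it does nothing in this simulated world.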

I am not actively involved in the world of publishing (either writing or reviewing articles for publication). But I can appreciate the allure of publishing positive results, especially on novel and groundbreaking interventions like low-cost SMS outreach. As a reader and as someone who works in academic innovation, I certainly find them exciting to consume! But it is essential that academics and practitioners publish their results, regardless of whether the effects of the intervention being tested were positive, null, or negative.

Trial registration is an important step towards more representative dissemination of evaluation outcomes. In addition to facilitating the discovery of published and unpublished research, trial registries also begin to address publication bias through public registration of an evaluation before its completion (ideally, before it even begins). Several studies of, or in collaboration with, NewGlobe programmes have been registered in the AEA RCT Registry. Together, the transparency encouraged by this and similar registries (such as EGAP’s Registry, ICPSR’s REES, 3ie’s RIDIE, and the Open Science Framework (OSF), among others) and more robust efforts to publish null results are important steps towards reducing publication bias and contributing to a broadly representative academic literature.

To this end, J-PAL runs the AEA RCT Registry and works closely with other organisations that promote research transparency, including the Berkeley Initiative for Transparency in the Social Sciences (BITSS), Center for Open Science, Innovations for Poverty Action (IPA), and International Initiative for Impact Evaluation (3ie), among others. J-PAL additionally has a set of practical resources for researchers looking to register a study or publish data.

NewGlobe is in a unique position: we operate at a sufficient scale to evaluate such interventions in our own context before taking up any new approach. Smaller organisations and school networks, however, are forced to rely more heavily on the literature to determine whether an intervention like SMS outreach should be adopted. If only positive findings are published, we and other implementing organisations risk overinvesting in ineffective interventions in response to a non-representative sample of studies.

It is difficult to know how many unpublished evaluations of SMS have found null or negative results (especially for those studies where the findings were never even written up). But so long as these studies are of a high quality, they undoubtedly deserve a place in the literature. Null findings, while potentially less intriguing, are no less important for organisations. It is our collective responsibility, as a community of researchers and practitioners, to share what we have learned in an open, honest, and humble way in order to serve communities, parents, and children in the most effective way possible.